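This page is under construction as of 2017-08-03 (Thu) 11:54:42. Note that the content already written is also likely to change substantially.

Akira Kageyama, Department of Computational Science, Graduate School of System Informatics, Kobe University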

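2-D parallelization

- We continue with the example problem of heat conduction in a square domain (solving for the equilibrium temperature distribution).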

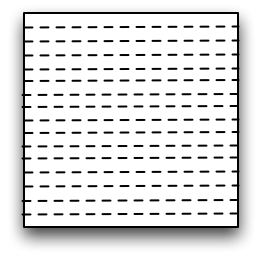

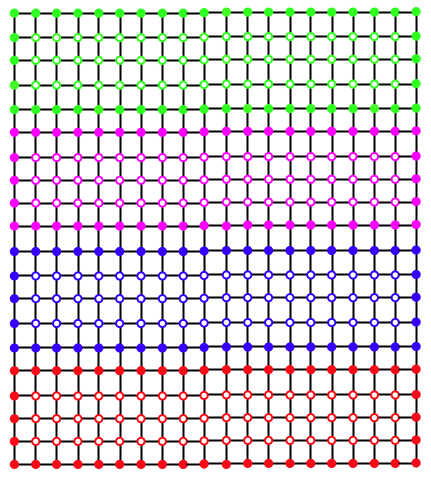

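- So far, to parallelize with MPI, we divided the square domain into several subdomains along the y direction (j direction) and assigned one MPI process to each subdomain. This is called parallelization by 1-D domain decomposition. The figure below shows an example in which the square is divided into 16 subdomains.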

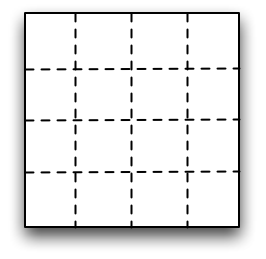

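- Parallelization by 2-D domain decomposition is also possible. Dividing the square two-dimensionally into 16 subdomains gives the layout shown in the figure.

- Both figures use 16 MPI processes, so one might expect the two decompositions to be equally fast.

- That is not the case. It would be true if communication between processes took no time, but in practice inter-process communication (MPI_SEND, MPI_RECV, and so on) takes a finite time, and in fact a rather long one.

- Assuming, then, that inter-process communication is slow, which is faster: 1-D or 2-D domain decomposition? And among the many possible 2-D decompositions, which one is best?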

Computation and communication

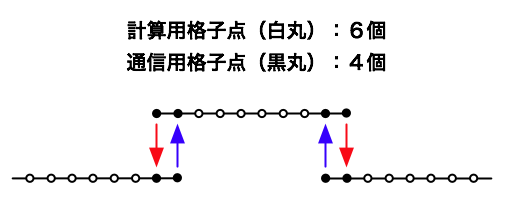

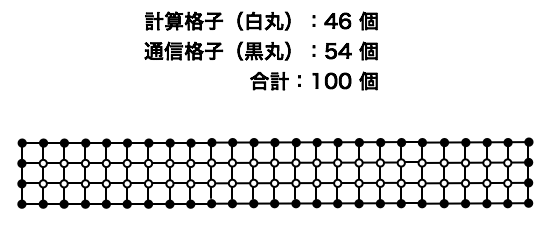

- Consider a 1-D space discretized with grid points, with MPI used for communication between processes.

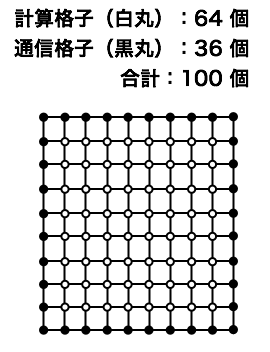

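- There are two kinds of grid points. One kind is used only for computation. The other kind sends or receives data in MPI communication. (The second grid point from the outer edge takes part in both computation and communication.)

- The amount of communication performed by one MPI process is proportional to the number of grid points that communicate. When communication is slow, the fewer such grid points the better.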
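
As a minimal sketch of this distinction, the following serial Fortran program emulates two neighboring MPI processes with two ordinary arrays. The names (halo_sketch, left, right, n) and the use of array copies in place of MPI_SEND and MPI_RECV are assumptions made for illustration; they are not taken from the lecture code. Each array has n computational grid points (indices 1 to n) plus one extra point on each side (indices 0 and n+1) that only holds received data.

```fortran
program halo_sketch
  ! Serial emulation of two neighboring processes on a 1-D grid.
  ! Assumption: plain array copies stand in for MPI_SEND / MPI_RECV.
  implicit none
  integer, parameter :: n = 5          ! computational points per process
  real :: left(0:n+1), right(0:n+1)    ! indices 0 and n+1 are halo points
  real :: work(1:n)
  integer :: i

  left  = 0.0
  right = 0.0
  left(1:n)  = 1.0
  right(1:n) = 2.0

  ! "Communication": point n of left and point 1 of right are sent;
  ! point n+1 of left and point 0 of right receive the data.
  left(n+1) = right(1)
  right(0)  = left(n)

  ! "Computation": every point 1..n of left is updated from its neighbors.
  do i = 1, n
    work(i) = 0.5 * (left(i-1) + left(i+1))
  end do
  left(1:n) = work

  print *, 'left after one update: ', left(1:n)
end program halo_sketch
```

In this 1-D setting each process exchanges one grid point per neighbor regardless of n, while the number of computed points grows with n.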

1-D and 2-D domain decomposition

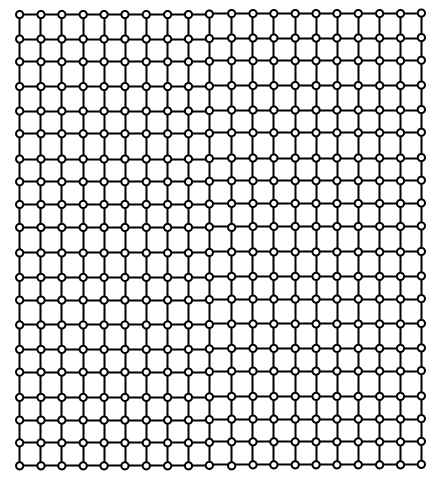

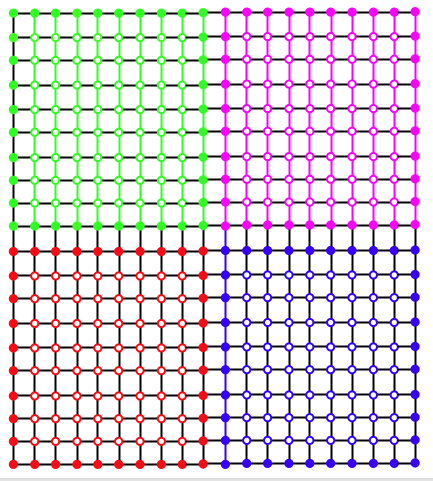

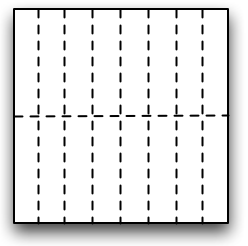

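- The figure shows the square domain discretized with 400 grid points.

- Consider parallelizing this with four MPI processes. With 1-D domain decomposition, the layout is as shown in the figure.

- With 2-D domain decomposition, again using four MPI processes, the layout is as shown in the figure.

- Clearly, the amount of communication per process is smaller with 2-D domain decomposition.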
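
The comparison can be checked by counting. As a rough worked example, assume that the 400 grid points form a 20 × 20 grid and that each process sends one line of grid points to each neighboring process. The symbols N_1D and N_2D are introduced here for illustration and denote the number of grid points sent per step by the busiest process:

```latex
\begin{align*}
N_{\mathrm{1D}} &= 2 \times 20 = 40
  && \text{(an interior strip of $20 \times 5$ points has two neighbors)} \\
N_{\mathrm{2D}} &= 2 \times 10 = 20
  && \text{(a block of $10 \times 10$ points has two neighbors)}
\end{align*}
```

Under these assumptions, the 2-D decomposition halves the amount of data sent by the busiest process.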

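Choosing a 2-D domain decomposition

- Even with the same number of MPI processes, many different 2-D domain decompositions are possible. For example, when the square is divided into 16 rectangles, which of the following two layouts has less communication?

- Because the square is divided into 16 pieces, the area handled by one MPI process is the same in either layout (one sixteenth of the original square).

Report assignment 2

- Consider a 2-D domain decomposition of the square domain that uses m MPI processes in the x direction and n in the y direction, mn processes in total. When mn = 16, what are the optimal numbers of divisions m and n? Give your reasoning.

- The figure below is a hint: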

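Parallel code with 2-D domain decomposition

- A point that needs care when parallelizing by domain decomposition is how the MPI processes are placed. In a 2-D domain decomposition, the partners a given process communicates with most often are its four neighbors (east, west, south, and north). Taking into account the communication characteristics that follow from the machine's network wiring (topology), it is desirable to place neighboring processes where communication between them is "closest" in terms of communication speed.

- The MPI routine MPI_CART_CREATE distributes the processes over a multidimensional grid. When it is called with reorder = .true., the MPI library is allowed to take this point into account by renumbering the ranks to suit the network. How much it actually optimizes depends on the MPI implementation.

- Finally, here is thermal_diffusion_decomp2d.f90, a code for the heat conduction problem that uses 2-D domain decomposition with MPI_CART_CREATE.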

!=========================================================================

! thermal_diffusion_decomp2d.f90

!

! Purpose:

! To calculate thermal equilibrium state, or the temperature

! distribution T(x,y) in a unit square, 0<=(x,y)<=1.

! Heat source is constant and uniform in the square.

! Temperature's boundary condition is fixed; T=0 on the four

! sides.

!

! Method:

! 2nd order finite difference approximation for the Poisson

! equation of the temperature,

! \nabla^2 T(x,y) + heat_source = 0.

! leads to

! T(i,j) = (T(i+1,j)+T(i-1,j)+T(i,j+1)+T(i,j-1)) / 4 &

! + heat_source*h^2/4,

! where the grid spacing, h, is same and uniform in x-

! and y-directions. Jacobi method is used to solve this.

!

! Parallelization:

! MPI parallelization under 2-dimensional domain decomposition.

!

! Reference codes:

! - "thermal_diffusion.f90" is a companion code that is

! parallelized with a 1-D domain decomposition.

! - "sample_birdseyeview.gp" is a gnuplot script to visualize

! T(i,j) distribution in the square, produced by the routine

! temperature__output_2d_profile in this code.

!

! Coded by Akira Kageyama,

! at Kobe University,

! on 2010.07.15,

! for the Lecture Series "Computational Science" (2010).

!=========================================================================

module constants

implicit none

integer, parameter :: SP = kind(1.0)

integer, parameter :: DP = selected_real_kind(2*precision(1.0_SP))

integer, parameter :: MESH_SIZE = 61

integer, parameter :: LOOP_MAX = 10000

end module constants

module parallel

use constants

use mpi

implicit none

private

public :: parallel__initialize, &

parallel__i_am_on_border, &

parallel__communicate, &

parallel__finalize, &

parallel__set_prof_2d, &

parallel__tellme

type ranks_

integer :: me

integer :: north, south, west, east

end type ranks_

type(ranks_) :: ranks

integer, parameter :: ndim = 2 ! 2-D domain decomposition

type process_topology_

integer :: comm

integer, dimension(ndim) :: dims

integer, dimension(ndim) :: coords

end type process_topology_

type(process_topology_) :: cart2d

integer :: nprocs

integer :: istt, iend

integer :: jstt, jend

contains

!== Private ==!

! Each dataTransferTo* routine sends sent_vect to one neighbor and

! receives recv_vect from the opposite neighbor. Processes with an even

! coordinate send first and those with an odd coordinate receive first,

! so the blocking MPI_SEND/MPI_RECV calls pair up without deadlock.

subroutine dataTransferToEast(n,sent_vect, recv_vect)

integer, intent(in) :: n

real(DP), dimension(n), intent(in) :: sent_vect

real(DP), dimension(n), intent(out) :: recv_vect

integer :: ierr

integer, dimension(MPI_STATUS_SIZE) :: status

if ( mod(cart2d%coords(1),2)==0 ) then

call MPI_SEND(sent_vect, n, MPI_DOUBLE_PRECISION, ranks%east, &

0, cart2d%comm, ierr)

call MPI_RECV(recv_vect, n, MPI_DOUBLE_PRECISION, ranks%west, &

MPI_ANY_TAG, cart2d%comm, status, ierr)

else

call MPI_RECV(recv_vect, n, MPI_DOUBLE_PRECISION, ranks%west, &

MPI_ANY_TAG, cart2d%comm, status, ierr)

call MPI_SEND(sent_vect, n, MPI_DOUBLE_PRECISION, ranks%east, &

0, cart2d%comm, ierr)

end if

end subroutine dataTransferToEast

subroutine dataTransferToNorth(n,sent_vect, recv_vect)

integer, intent(in) :: n

real(DP), dimension(n), intent(in) :: sent_vect

real(DP), dimension(n), intent(out) :: recv_vect

integer :: ierr

integer, dimension(MPI_STATUS_SIZE) :: status

if ( mod(cart2d%coords(2),2)==0 ) then

call MPI_SEND(sent_vect, n, MPI_DOUBLE_PRECISION, ranks%north, &

0, cart2d%comm, ierr)

call MPI_RECV(recv_vect, n, MPI_DOUBLE_PRECISION, ranks%south, &

MPI_ANY_TAG, cart2d%comm, status, ierr)

else

call MPI_RECV(recv_vect, n, MPI_DOUBLE_PRECISION, ranks%south, &

MPI_ANY_TAG, cart2d%comm, status, ierr)

call MPI_SEND(sent_vect, n, MPI_DOUBLE_PRECISION, ranks%north, &

0, cart2d%comm, ierr)

end if

end subroutine dataTransferToNorth

subroutine dataTransferToSouth(n,sent_vect, recv_vect)

integer, intent(in) :: n

real(DP), dimension(n), intent(in) :: sent_vect

real(DP), dimension(n), intent(out) :: recv_vect

integer :: ierr

integer, dimension(MPI_STATUS_SIZE) :: status

if ( mod(cart2d%coords(2),2)==0 ) then

call MPI_SEND(sent_vect, n, MPI_DOUBLE_PRECISION, ranks%south, &

0, cart2d%comm, ierr)

call MPI_RECV(recv_vect, n, MPI_DOUBLE_PRECISION, ranks%north, &

MPI_ANY_TAG, cart2d%comm, status, ierr)

else

call MPI_RECV(recv_vect, n, MPI_DOUBLE_PRECISION, ranks%north, &

MPI_ANY_TAG, cart2d%comm, status, ierr)

call MPI_SEND(sent_vect, n, MPI_DOUBLE_PRECISION, ranks%south, &

0, cart2d%comm, ierr)

end if

end subroutine dataTransferToSouth

subroutine dataTransferToWest(n,sent_vect, recv_vect)

integer, intent(in) :: n

real(DP), dimension(n), intent(in) :: sent_vect

real(DP), dimension(n), intent(out) :: recv_vect

integer :: ierr

integer, dimension(MPI_STATUS_SIZE) :: status

if ( mod(cart2d%coords(1),2)==0 ) then

call MPI_SEND(sent_vect, n, MPI_DOUBLE_PRECISION, ranks%west, &

0, cart2d%comm, ierr)

call MPI_RECV(recv_vect, n, MPI_DOUBLE_PRECISION, ranks%east, &

MPI_ANY_TAG, cart2d%comm, status, ierr)

else

call MPI_RECV(recv_vect, n, MPI_DOUBLE_PRECISION, ranks%east, &

MPI_ANY_TAG, cart2d%comm, status, ierr)

call MPI_SEND(sent_vect, n, MPI_DOUBLE_PRECISION, ranks%west, &

0, cart2d%comm, ierr)

end if

end subroutine dataTransferToWest

!== Public ==!

subroutine parallel__communicate(ism1,iep1,jsm1,jep1,field)

integer, intent(in) :: ism1, iep1, jsm1, jep1

real(DP), dimension(ism1:iep1,jsm1:jep1), intent(inout) :: field

integer :: ierr

integer, dimension(MPI_STATUS_SIZE) :: status

integer :: i, j, istt, iend, jstt, jend, isize, jsize

real(DP), dimension(:), allocatable :: sent_vect, recv_vect

istt = ism1 + 1

iend = iep1 - 1

jstt = jsm1 + 1

jend = jep1 - 1

! First exchange halo rows with the north and south neighbors (full

! width, including the i-direction halo points), then exchange halo

! columns with the east and west neighbors.

isize = iep1 - ism1 + 1

allocate(sent_vect(isize),recv_vect(isize))

sent_vect(1:isize) = field(ism1:iep1,jend)

call dataTransferToNorth(isize,sent_vect,recv_vect)

field(ism1:iep1,jsm1) = recv_vect(1:isize)

sent_vect(1:isize) = field(ism1:iep1,jstt)

call dataTransferToSouth(isize,sent_vect,recv_vect)

field(ism1:iep1,jep1) = recv_vect(1:isize)

deallocate(sent_vect,recv_vect)

jsize = jend - jstt + 1

allocate(sent_vect(jsize),recv_vect(jsize))

sent_vect(1:jsize) = field(iend,jstt:jend)

call dataTransferToEast(jsize,sent_vect,recv_vect)

field(ism1,jstt:jend) = recv_vect(1:jsize)

sent_vect(1:jsize) = field(istt,jstt:jend)

call dataTransferToWest(jsize,sent_vect,recv_vect)

field(iep1,jstt:jend) = recv_vect(1:jsize)

deallocate(sent_vect,recv_vect)

end subroutine parallel__communicate

subroutine parallel__finalize

integer :: ierr

call mpi_finalize(ierr)

end subroutine parallel__finalize

function parallel__i_am_on_border(which) result(answer)

character(len=*), intent(in) :: which

logical :: answer

answer = .false.

if ( which=='west' .and.cart2d%coords(1)==0 ) answer = .true.

if ( which=='east' .and.cart2d%coords(1)==cart2d%dims(1)-1 ) answer = .true.

if ( which=='south'.and.cart2d%coords(2)==0 ) answer = .true.

if ( which=='north'.and.cart2d%coords(2)==cart2d%dims(2)-1 ) answer = .true.

end function parallel__i_am_on_border

subroutine parallel__initialize

logical, dimension(ndim) :: is_periodic

integer :: ierr

logical :: reorder

call mpi_init(ierr)

call mpi_comm_size(MPI_COMM_WORLD, nprocs, ierr)

call mpi_comm_rank(MPI_COMM_WORLD, ranks%me, ierr)

cart2d%dims(:) = 0 ! required by mpi_dims_create

call mpi_dims_create(nprocs, ndim, cart2d%dims, ierr)

is_periodic(1) = .false.

is_periodic(2) = .false.

reorder = .true.

call MPI_CART_CREATE(MPI_COMM_WORLD, ndim, cart2d%dims, &

is_periodic, reorder, cart2d%comm, ierr)

call MPI_CART_SHIFT(cart2d%comm, 0, 1, &

ranks%west, ranks%east, ierr)

call MPI_CART_SHIFT(cart2d%comm, 1, 1, &

ranks%south, ranks%north, ierr)

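! With reorder = .true., the rank in cart2d%comm may differ from the

! rank in MPI_COMM_WORLD, so query it again before asking for coords.

call mpi_comm_rank(cart2d%comm, ranks%me, ierr)

call MPI_CART_COORDS(cart2d%comm, ranks%me, 2, cart2d%coords, ierr)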

print *,' dims(1) = ', cart2d%dims(1)

print *,' dims(2) = ', cart2d%dims(2)

print *,' coords(1) = ', cart2d%coords(1)

print *,' coords(2) = ', cart2d%coords(2)

istt = MESH_SIZE * cart2d%coords(1) / cart2d%dims(1) + 1

iend = MESH_SIZE * (cart2d%coords(1)+1) / cart2d%dims(1)

jstt = MESH_SIZE * cart2d%coords(2) / cart2d%dims(2) + 1

jend = MESH_SIZE * (cart2d%coords(2)+1) / cart2d%dims(2)

print *,' istt = ', istt, ' iend = ', iend

print *,' jstt = ', jstt, ' jend = ', jend

print *,' north = ', ranks%north

print *,' south = ', ranks%south

print *,' west = ', ranks%west

print *,' east = ', ranks%east

print *,' i_am_on_border("west") = ', parallel__i_am_on_border("west")

print *,' i_am_on_border("east") = ', parallel__i_am_on_border("east")

print *,' i_am_on_border("north") = ', parallel__i_am_on_border("north")

print *,' i_am_on_border("south") = ', parallel__i_am_on_border("south")

end subroutine parallel__initialize

function parallel__set_prof_2d(ism1,iep1, &

jsm1,jep1, &

istt_,iend_, &

jstt_,jend_, &

myprof) result(prof_2d)

integer, intent(in) :: ism1

integer, intent(in) :: iep1

integer, intent(in) :: jsm1

integer, intent(in) :: jep1

integer, intent(in) :: istt_

integer, intent(in) :: iend_

integer, intent(in) :: jstt_

integer, intent(in) :: jend_

real(DP), dimension(ism1:iep1,jsm1:jep1), intent(in) :: myprof

real(DP), dimension(0:MESH_SIZE+1,0:MESH_SIZE+1) :: prof_2d

real(DP), dimension(0:MESH_SIZE+1,0:MESH_SIZE+1) :: work

integer :: ierr

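! prof_2d and work are indexed 0:MESH_SIZE+1 in both directions,

! so each holds (MESH_SIZE+2)**2 elements.

integer, parameter :: n_total = (MESH_SIZE+2)**2

work(:,:) = 0.0_DP

work(istt_:iend_,jstt_:jend_) = myprof(istt_:iend_,jstt_:jend_)

! Reduce the whole array, starting from its first element (0,0).

call mpi_allreduce(work(0,0), & ! source

prof_2d(0,0), & ! target

n_total, &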

MPI_DOUBLE_PRECISION, &

MPI_SUM, &

cart2d%comm, &

ierr)

end function parallel__set_prof_2d

function parallel__tellme(which) result(val)

character(len=*), intent(in) :: which

integer :: val

select case (which)

case ('rank_east')

val = ranks%east

case ('rank_north')

val = ranks%north

case ('rank_south')

val = ranks%south

case ('rank_west')

val = ranks%west

case ('rank_me')

val = ranks%me

case ('iend')

val = iend

case ('istt')

val = istt

case ('jend')

val = jend

case ('jstt')

val = jstt

case ('nprocs')

val = nprocs

case default

print *, 'Bad arg in parallel__tellme.'

call parallel__finalize

stop

end select

end function parallel__tellme

end module parallel

module temperature

use constants

use parallel

implicit none

private

public :: temperature__initialize, &

temperature__finalize, &

temperature__output_2d_profile, &

temperature__update

real(DP), allocatable, dimension(:,:) :: temp

real(DP), allocatable, dimension(:,:) :: work

real(DP), parameter :: SIDE = 1.0_DP

real(DP) :: h = SIDE / (MESH_SIZE+1)

real(DP) :: heat

integer :: istt, iend, jstt, jend

integer :: myrank, north, south, west, east, nprocs

contains

!=== Private ===

subroutine boundary_condition

if ( parallel__i_am_on_border('west') ) temp( 0,jstt-1:jend+1) = 0.0_DP

if ( parallel__i_am_on_border('east') ) temp( MESH_SIZE+1,jstt-1:jend+1) = 0.0_DP

if ( parallel__i_am_on_border('north') ) temp(istt-1:iend+1, MESH_SIZE+1) = 0.0_DP

if ( parallel__i_am_on_border('south') ) temp(istt-1:iend+1, 0) = 0.0_DP

end subroutine boundary_condition

!=== Public ===

subroutine temperature__initialize

real(DP) :: heat_source = 4.0

istt = parallel__tellme('istt')

iend = parallel__tellme('iend')

jstt = parallel__tellme('jstt')

jend = parallel__tellme('jend')

myrank = parallel__tellme('rank_me')

north = parallel__tellme('rank_north')

south = parallel__tellme('rank_south')

east = parallel__tellme('rank_east')

west = parallel__tellme('rank_west')

nprocs = parallel__tellme('nprocs')

allocate(temp(istt-1:iend+1,jstt-1:jend+1))

allocate(work( istt:iend , jstt:jend) )

heat = (heat_source/4) * h * h

temp(:,:) = 0.0_DP ! initial condition

end subroutine temperature__initialize

subroutine temperature__finalize

deallocate(work,temp)

end subroutine temperature__finalize

subroutine temperature__output_2d_profile

real(DP), dimension(0:MESH_SIZE+1, &

0:MESH_SIZE+1) :: prof

integer :: counter = 0 ! saved

integer :: ierr ! use for MPI

integer :: istt_, iend_, jstt_, jend_

character(len=4) :: serial_num ! put on file name

character(len=*), parameter :: base = "../data/temp.2d."

integer :: i, j

call set_istt_and_iend

call set_jstt_and_jend

write(serial_num,'(i4.4)') counter

prof(:,:) = parallel__set_prof_2d(istt-1, iend+1, &

jstt-1, jend+1, &

istt_, iend_, &

jstt_, jend_, &

temp)

if ( myrank==0 ) then

open(10,file=base//serial_num)

do j = 0 , MESH_SIZE+1

do i = 0 , MESH_SIZE+1

write(10,*) i, j, prof(i,j)

end do

write(10,*)' ' ! gnuplot requires a blank line here.

end do

close(10)

end if

counter = counter + 1

contains

subroutine set_istt_and_iend

istt_ = istt

iend_ = iend

if ( parallel__i_am_on_border('west') ) then

istt_ = 0

end if

if ( parallel__i_am_on_border('east') ) then

iend_ = MESH_SIZE+1

end if

end subroutine set_istt_and_iend

subroutine set_jstt_and_jend

jstt_ = jstt

jend_ = jend

if ( parallel__i_am_on_border('south') ) then

jstt_ = 0

end if

if ( parallel__i_am_on_border('north') ) then

jend_ = MESH_SIZE+1

end if

end subroutine set_jstt_and_jend

end subroutine temperature__output_2d_profile

subroutine temperature__update

integer :: i, j

call parallel__communicate(istt-1,iend+1,jstt-1,jend+1,temp)

call boundary_condition

do j = jstt , jend

do i = istt , iend

work(i,j) = (temp(i-1,j)+temp(i+1,j)+temp(i,j-1)+temp(i,j+1))*0.25_DP &

+ heat

end do

end do

temp(istt:iend,jstt:jend) = work(istt:iend,jstt:jend)

end subroutine temperature__update

end module temperature

program thermal_diffusion_decomp2d

use constants

use parallel

use temperature

implicit none

integer :: loop

call parallel__initialize

call temperature__initialize

call temperature__output_2d_profile

do loop = 1 , LOOP_MAX

call temperature__update

if ( mod(loop,100)==0 ) call temperature__output_2d_profile

end do

call temperature__finalize

call parallel__finalize

end program thermal_diffusion_decomp2d
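
The index ranges istt:iend and jstt:jend set in parallel__initialize can be examined on their own. The following serial sketch applies the same integer formula in one direction. The value dims = 4 is an assumed example (it is what a 4 × 4 process grid would give), and the program name and loop are for illustration only.

```fortran
program block_range_sketch
  ! Serial check of the index formula used in parallel__initialize.
  ! Assumption: dims = 4 processes along one direction (example value).
  implicit none
  integer, parameter :: MESH_SIZE = 61   ! same value as in module constants
  integer, parameter :: dims = 4
  integer :: coord, istt, iend

  do coord = 0, dims - 1
    ! Integer division truncates, so the ranges tile 1..MESH_SIZE
    ! without gaps or overlaps even when dims does not divide MESH_SIZE.
    istt = MESH_SIZE * coord / dims + 1
    iend = MESH_SIZE * (coord + 1) / dims
    print *, 'coord =', coord, ' istt =', istt, ' iend =', iend, &
             ' points =', iend - istt + 1
  end do
end program block_range_sketch
```

With these values the four ranges are 1:15, 16:30, 31:45, and 46:61, so the last process holds one more grid point than the others.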
